Add durable workflows to your code in minutes. Make apps resilient to any failure. Go from vibe-coded software to production-ready in minutes.

Write your business logic in normal code, with branches, loops, subtasks, and retries. DBOS makes it resilient to any failure.

Consume events exactly once, with no need to worry about timeouts or offsets.

Schedule your durable workflows to run exactly once per time interval. Record a stock's price once a minute, migrate some data once every hour, or send emails to inactive users once a week.
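One common way to get "once per interval" behavior is to derive a key from the start of the interval and let a database uniqueness constraint reject repeats. The sketch below illustrates that idea with SQLite from the Python standard library. It is not the DBOS API or its implementation, and the table and function names (`scheduled_runs`, `slot_for`, `run_once_per_interval`) are hypothetical.

```python
import sqlite3
from datetime import datetime, timezone

# Toy illustration only: NOT the DBOS API. All names here are hypothetical.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE scheduled_runs (job TEXT, slot TEXT, PRIMARY KEY (job, slot))"
)

def slot_for(now, interval_seconds):
    """Round a timestamp down to the start of its interval."""
    ts = int(now.timestamp())
    start = ts - ts % interval_seconds
    return datetime.fromtimestamp(start, tz=timezone.utc).isoformat()

def run_once_per_interval(conn, job, now, interval_seconds, fn):
    """Run fn only if no run has been claimed for this job and interval."""
    slot = slot_for(now, interval_seconds)
    try:
        with conn:  # commits on success, rolls back on error
            conn.execute("INSERT INTO scheduled_runs VALUES (?, ?)", (job, slot))
    except sqlite3.IntegrityError:
        return False  # this interval was already claimed
    fn(slot)
    return True

recorded = []
ticks = [
    datetime(2024, 1, 1, 12, 0, 5, tzinfo=timezone.utc),
    datetime(2024, 1, 1, 12, 0, 40, tzinfo=timezone.utc),  # same minute as above
    datetime(2024, 1, 1, 12, 1, 10, tzinfo=timezone.utc),
]
results = [
    run_once_per_interval(conn, "record_price", t, 60, recorded.append)
    for t in ticks
]
```

This sketch only claims the interval. If the process crashed after the claim but before `fn` finished, the run would be lost, so a real system would need to pair the claim with durable execution to finish the claimed run after a restart.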

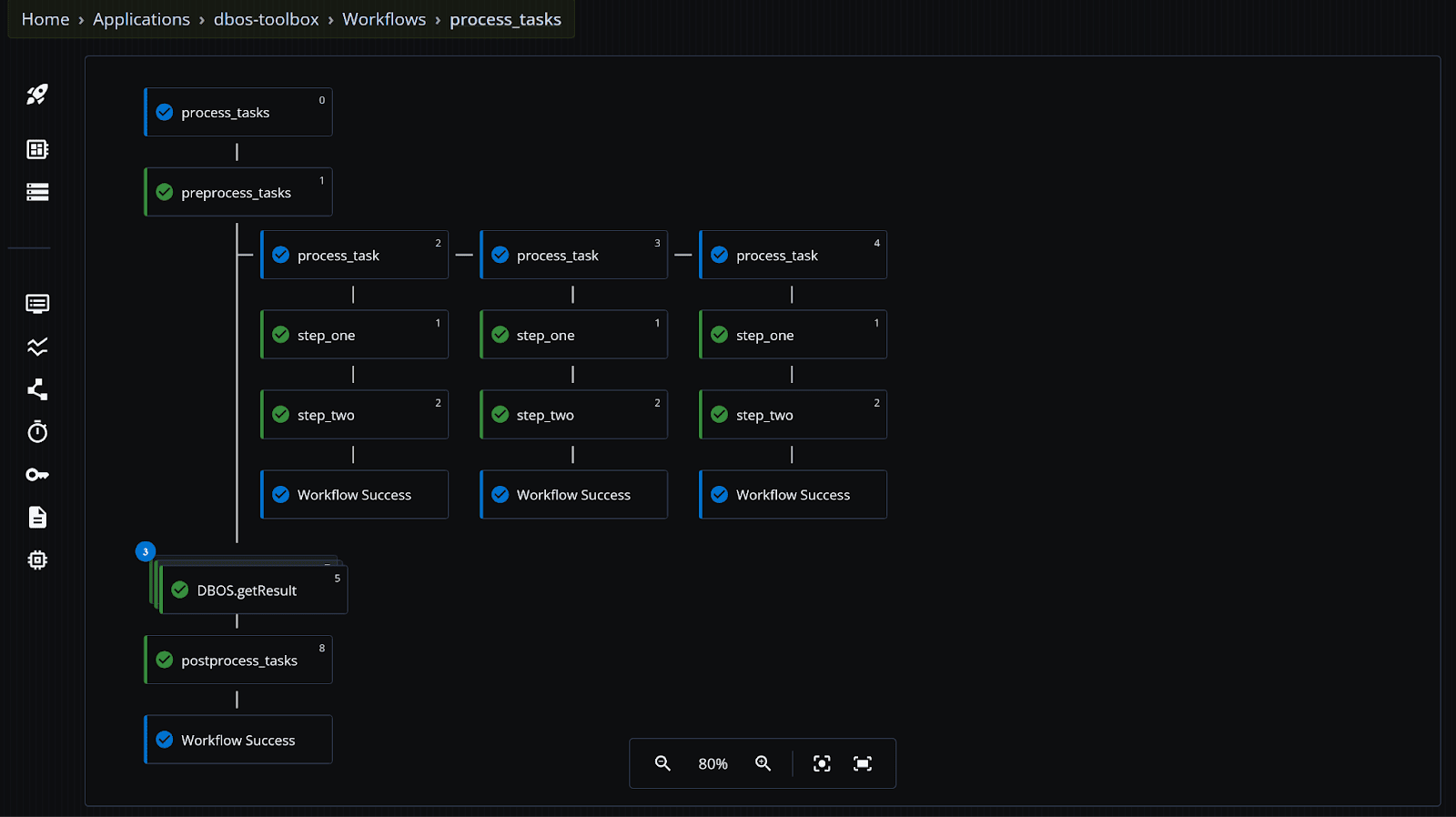

Build data pipelines that are reliable and observable by default. DBOS durable queues guarantee all your tasks complete.

Use durable workflows to build reliable, fault-tolerant AI agents.

Effortlessly mix synchronous webhook code with asynchronous event processing. Reliably wait weeks or months for events, then use idempotency and durable execution to process them exactly once.
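The idempotency idea mentioned above can be sketched in a few lines: record each event's ID in the same transaction as its side effect, so a redelivered event is detected and skipped. This is a minimal illustration using SQLite from the Python standard library, not DBOS code. The table names, the `handle_event` function, and the event shape are assumptions made for the example.

```python
import sqlite3

# Toy illustration only: NOT the DBOS API. All names here are hypothetical.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE processed_events (event_id TEXT PRIMARY KEY)")
conn.execute("CREATE TABLE payments (event_id TEXT, amount INTEGER)")

def handle_event(conn, event):
    """Apply an event's effect once, even if the event is delivered repeatedly."""
    try:
        with conn:  # one transaction: the dedup record and the effect commit together
            conn.execute(
                "INSERT INTO processed_events VALUES (?)", (event["id"],)
            )
            conn.execute(
                "INSERT INTO payments VALUES (?, ?)", (event["id"], event["amount"])
            )
        return True
    except sqlite3.IntegrityError:
        return False  # duplicate delivery: the primary key rejected it

deliveries = [
    {"id": "evt-1", "amount": 100},
    {"id": "evt-2", "amount": 250},
    {"id": "evt-1", "amount": 100},  # redelivered webhook
]
results = [handle_event(conn, e) for e in deliveries]
total = conn.execute("SELECT SUM(amount) FROM payments").fetchone()[0]
```

Because the deduplication record and the payment row commit in a single transaction, a failure between the two cannot leave an event marked as processed without its effect applied.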

Add durable workflows to your app in just a few lines of code. No additional infrastructure required.

Run anywhere, from your own hardware to any cloud. No new infrastructure required.

Add a few annotations to your code to make it durable. Nothing else needed.

We never access your data. It stays private and under your control.

If your application crashes or restarts, DBOS automatically resumes your workflows from the last completed step.
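To make "resume from the last completed step" concrete, here is a toy sketch of step checkpointing: each step's output is saved under a workflow ID, and a rerun replays saved outputs instead of running completed steps again. It uses SQLite from the Python standard library and is not the DBOS API or its actual implementation. Names such as `run_step` and `checkout` are hypothetical.

```python
import json
import sqlite3

# Toy illustration only: NOT the DBOS API. All names here are hypothetical.
def make_store(path=":memory:"):
    conn = sqlite3.connect(path)
    conn.execute(
        "CREATE TABLE IF NOT EXISTS step_outputs ("
        "workflow_id TEXT, step_name TEXT, output TEXT, "
        "PRIMARY KEY (workflow_id, step_name))"
    )
    return conn

def run_step(conn, workflow_id, step_name, fn, *args):
    """Run fn once per (workflow_id, step_name); afterwards replay the saved output."""
    row = conn.execute(
        "SELECT output FROM step_outputs WHERE workflow_id = ? AND step_name = ?",
        (workflow_id, step_name),
    ).fetchone()
    if row is not None:
        return json.loads(row[0])  # step already completed: skip re-execution
    result = fn(*args)
    conn.execute(
        "INSERT INTO step_outputs VALUES (?, ?, ?)",
        (workflow_id, step_name, json.dumps(result)),
    )
    conn.commit()
    return result

calls = []  # records which steps actually executed

def reserve_inventory(order):
    calls.append("reserve")
    return {"order": order, "reserved": True}

def charge_card(order, fail):
    calls.append("charge")
    if fail:
        raise RuntimeError("simulated crash")
    return {"order": order, "charged": True}

def checkout(conn, workflow_id, order, fail=False):
    reserved = run_step(conn, workflow_id, "reserve", reserve_inventory, order)
    charged = run_step(conn, workflow_id, "charge", charge_card, order, fail)
    return reserved["reserved"] and charged["charged"]

conn = make_store()
try:
    checkout(conn, "wf-1", "order-42", fail=True)  # crashes during the second step
except RuntimeError:
    pass
ok = checkout(conn, "wf-1", "order-42")  # "restart": first step is replayed, not re-run
```

After the simulated crash, the second call to `checkout` skips `reserve_inventory` because its output is already stored, and only `charge_card` runs again.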

CEO & Co-Founder, Yutori.ai


Never lose progress: functions resume from the last successful step, even after crashes.

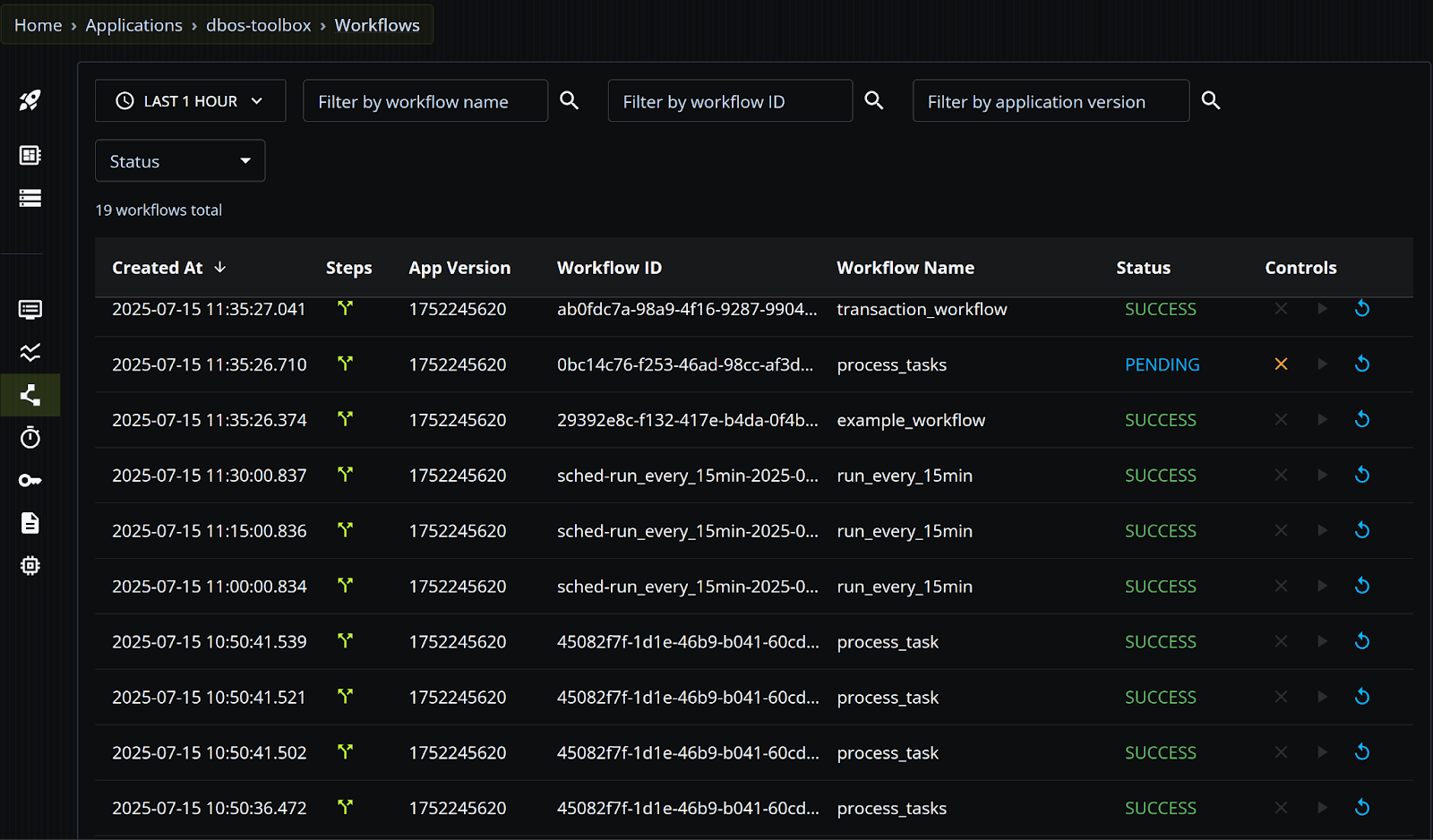

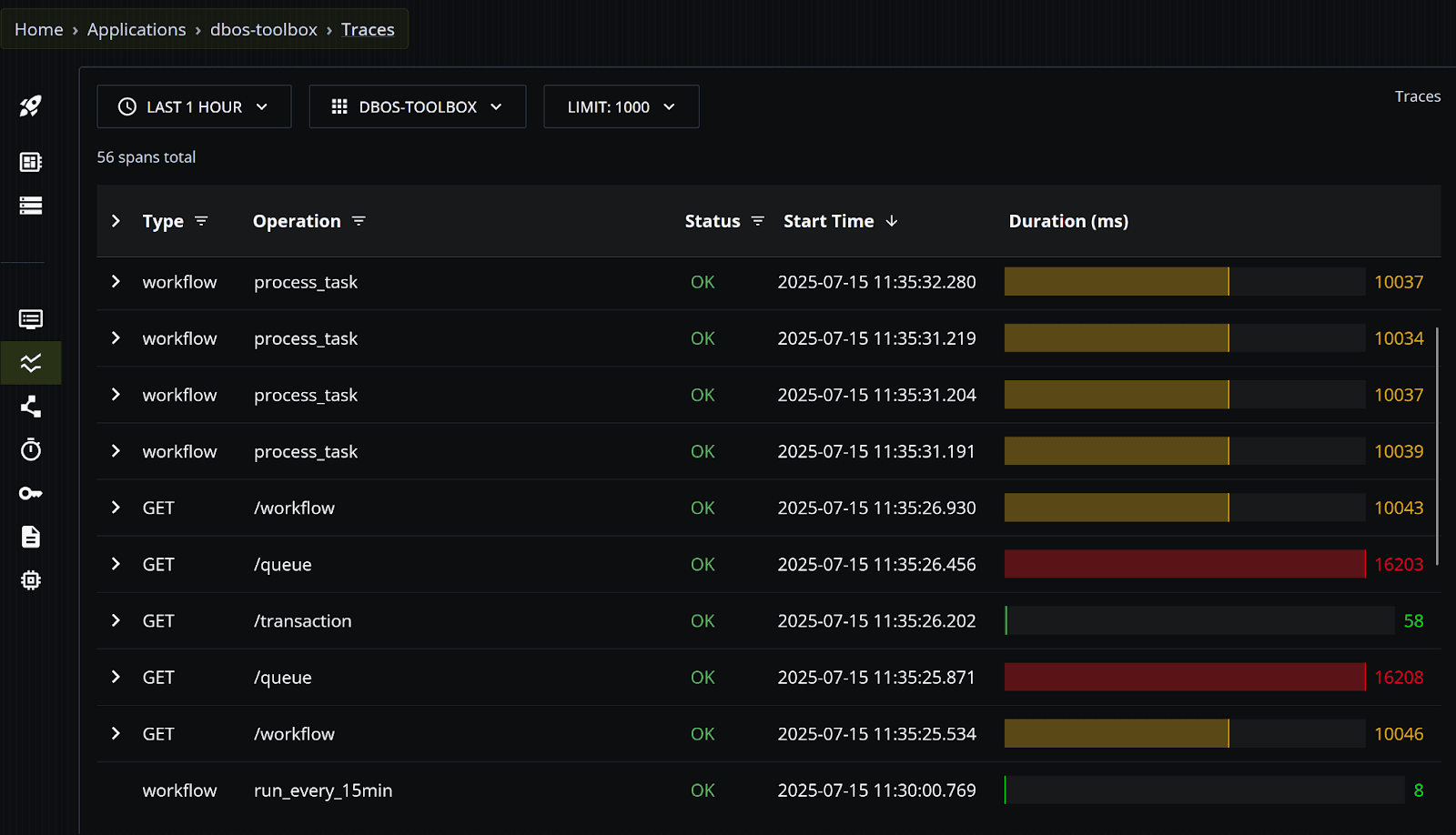

Interactively view, search, and manage your workflows from a graphical UI.

Lightweight, durable, distributed queues backed by Postgres.

DBOS will automatically scale your application to meet requests and notify you as limits approach.

Run your workflows on any infrastructure: cloud, on-prem, or containers.

Replace brittle cron jobs with reliable and observable workflows.

VP Technology, TMG.io

We prioritize security, privacy, and reliability, so your team can build with confidence, not manage infrastructure. Our platform meets the security and compliance standards you need to run mission-critical workflows at scale, ensuring seamless execution and enterprise-grade protection.

Audited regularly for compliance with your B2B solution.

Your data stays in your control. DBOS never sees your data, and you can keep your data wherever it is safest and most compliant for your use case.

Secure your account with single sign-on and SAML.

DBOS has been tested and designed to scale, so your team can focus on building a great product.

DBOS has been built with healthcare in mind to handle sensitive data.

Get started with a range of templates, and make your backend more durable, cost-efficient, and easier to maintain.

Use DBOS to build an AI deep research agent that searches Hacker News.

Use DBOS to build a reliable and scalable doc ingestion pipeline for your chat agent.

Use durable workflows to build an online storefront that's resilient to any failure.

Tooling and hosting to make your DBOS Transact deployments a success.

Ready for any platform.

Tooling to operate DBOS Transact applications anywhere.

A seriously fast serverless platform for DBOS Transact applications.

DBOS users get access to real-time help and open discussions.

Join the DBOS community and shape a new era in durable systems.

Use the open source DBOS Transact library, free forever.

Pair it with DBOS Pro, with a free 30-day trial.