Durable execution software does three important things:

- It makes software resilient to failures such as crashes, logic errors, and flaky backends, and ensures that workflows interrupted by a failure resume executing as if the failure never occurred.

- It minimizes the negative effects of failures, like lost revenue, lost data, and lost time.

- It speeds up development and minimizes technical debt by reducing the amount of error handling code you have to write.

In a nutshell, faster development, better quality, less risk.

However, if you’ve ever read a discussion about durable execution online, someone almost always asks “what’s the use case for it?”

It has always seemed like a strange question to me. I mean, who doesn’t want their software to run reliably and correctly? Shouldn’t durability be the default?

Then I realized that, historically speaking, adopting durable execution has been a BIG design decision. You either develop it yourself (oof, many developer-months of work) or use a durable execution service.

Before DBOS entered the market, the top options for durable execution included AWS Step Functions and Temporal. But using those systems requires you to:

- Rearchitect your applications - the parts of your application that need to execute durably must be implemented and run separately from your application code, on an external workflow orchestration service.

- Pay to execute every step of every workflow - if you’re paying to use workflow orchestration as a service, then you’re paying for every step executed by every workflow. It becomes very costly as your application scales up.

- Host and scale a separate durable execution server - if you’re self-hosting a durable workflow execution server like Temporal, you need to host and scale it (and the database it stores state in, such as Cassandra), which can take several full-time employees to operate.

If the high financial and cognitive costs of this design decision have you asking “do I need durable execution?”, it’s because you’re looking at legacy solutions.

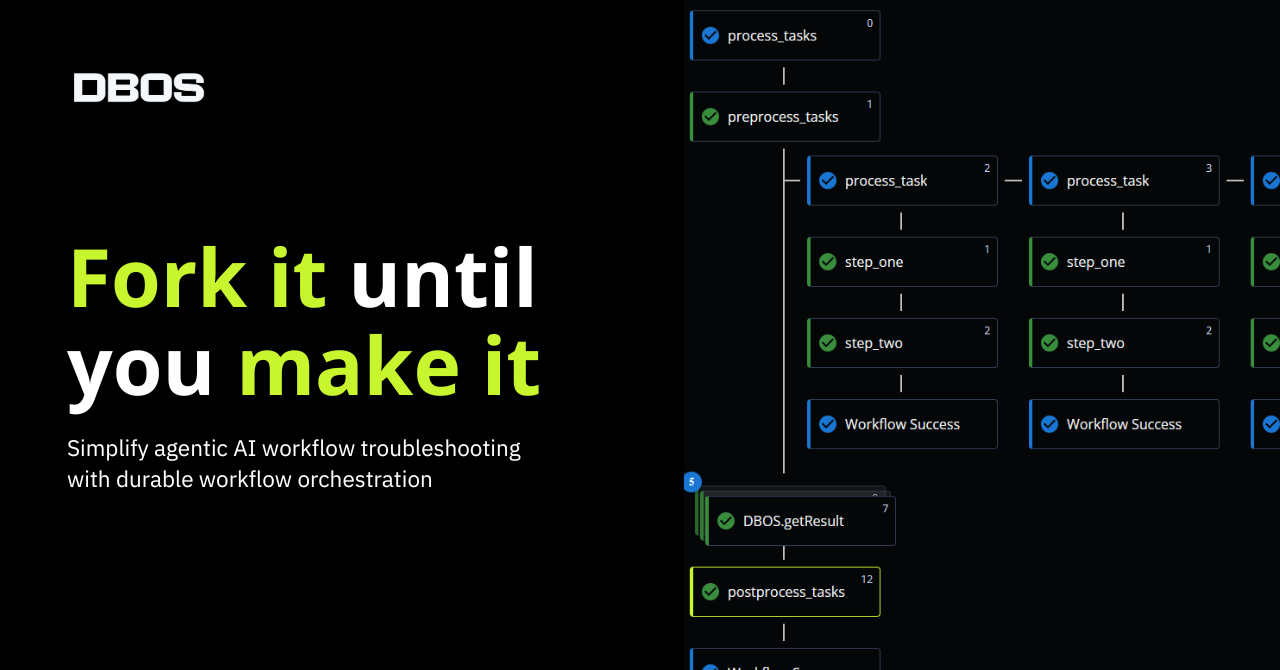

Enter DBOS: Durability by Default

DBOS was designed to be simple and lightweight enough to make every application durable. It’s an open source library that makes workflows and queues in your code durable; it just needs access to a Postgres-compatible database in which to store state (it can be your application database). When an app restarts after a crash, the library retrieves queues and unfinished workflows from Postgres and resumes execution as if the failure never occurred.
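To make the recovery idea concrete, here is a toy sketch of checkpoint-based durable execution in Python. It is not DBOS’s implementation or API: the `DurableRunner` class, its `run` method, and the `step_outputs` table are hypothetical names, and an in-memory SQLite database stands in for Postgres so the example runs on its own. Each step’s output is committed to the database as soon as the step finishes; re-running a workflow with the same ID after a failure reuses the saved outputs and resumes at the first step that has no checkpoint.

```python
import sqlite3
from typing import Callable


class DurableRunner:
    """Toy checkpoint-based workflow runner (hypothetical, not the DBOS API).

    Each completed step's output is stored in a table keyed by
    (workflow_id, step_num). Re-running a workflow with the same ID returns
    saved outputs for finished steps and executes only the remaining ones.
    """

    def __init__(self, conn: sqlite3.Connection) -> None:
        self.conn = conn
        with self.conn:
            self.conn.execute(
                "CREATE TABLE IF NOT EXISTS step_outputs ("
                " workflow_id TEXT NOT NULL,"
                " step_num INTEGER NOT NULL,"
                " output TEXT NOT NULL,"
                " PRIMARY KEY (workflow_id, step_num))"
            )

    def run(self, workflow_id: str, steps: list[Callable[[], str]]) -> list[str]:
        results: list[str] = []
        for step_num, step in enumerate(steps):
            row = self.conn.execute(
                "SELECT output FROM step_outputs WHERE workflow_id = ? AND step_num = ?",
                (workflow_id, step_num),
            ).fetchone()
            if row is not None:
                # Step finished before the failure: reuse its checkpointed output.
                results.append(row[0])
                continue
            output = step()  # may raise, simulating a crash mid-workflow
            with self.conn:  # commit the checkpoint before starting the next step
                self.conn.execute(
                    "INSERT INTO step_outputs VALUES (?, ?, ?)",
                    (workflow_id, step_num, output),
                )
            results.append(output)
        return results


# Usage: a three-step workflow whose last step fails on the first attempt.
calls = {"reserve": 0, "charge": 0, "ship": 0}
state = {"fail_next_ship": True}


def reserve() -> str:
    calls["reserve"] += 1
    return "reserved"


def charge() -> str:
    calls["charge"] += 1
    return "charged"


def ship() -> str:
    calls["ship"] += 1
    if state["fail_next_ship"]:
        state["fail_next_ship"] = False
        raise RuntimeError("simulated crash")
    return "shipped"


# A real system keeps this state in a persistent database such as Postgres;
# here one in-memory SQLite connection is shared across both runs instead.
runner = DurableRunner(sqlite3.connect(":memory:"))
try:
    runner.run("order-42", [reserve, charge, ship])
except RuntimeError:
    pass  # the first attempt dies during "ship"

result = runner.run("order-42", [reserve, charge, ship])  # the "recovery" run
print(result, calls)
# → ['reserved', 'charged', 'shipped'] {'reserve': 1, 'charge': 1, 'ship': 2}
```

Note that `reserve` and `charge` ran only once even though the workflow was executed twice. One consequence of this design: if a process dies after a step finishes but before its checkpoint commits, that step runs again on recovery, so steps with external side effects should be safe to retry.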

Here’s what makes DBOS simple enough to use in any software project where reliability and correctness matter:

No rearchitecture required

The open source DBOS Transact library makes your code durable in place. No need to build and run durable and non-durable parts of your code on separate platforms.

No external service to host

No need to pay for a workflow orchestration service or host a separate workflow orchestration server and dedicated DBMS.

No per-step cost

DBOS Transact is free, open source software that you can deploy anywhere. If you use the optional DBOS Conductor functionality to facilitate self-hosting, observability, and failure remediation, you pay per DBOS Transact application instance, not per workflow step.

If quality matters to you and you have a Postgres-compatible database handy, free yourself from agonizing durability design decisions and consider a durable-by-default approach with DBOS.

Still wondering about durable execution use cases?

There are two things to consider:

- How likely is it that 💩 will hit the fan if the software fails?

  - Is it customer- or market-facing, like SaaS or ecommerce? Failure will lose revenue, and 💩 will certainly fly.

  - Is it automating anything in real time (AI agents!!)? Failure will halt operations or cause business errors. Again, don your hazmat suit.

- How much 💩 will hit the fan if the software fails?

  - It’s really a matter of scale: the more revenue lost or data lost or corrupted per unit of time the software is down, the more important reliability becomes.

What you don’t have to consider is the cost of making your software durable. Just import the DBOS Transact library into your code, add a few annotations to your source, connect to Postgres, and you’re good to go.